Nvidia Corporation (NVDA) has long been the dominant player in the AI-GPU market, particularly in data centers, where high-compute capability is paramount. According to Germany-based IoT Analytics, Nvidia holds a 92% share of the data center GPU market.

Nvidia’s strength extends beyond chip performance to software. CUDA, its development platform launched in 2006, has become a cornerstone of AI development and is now used by more than 4 million developers.

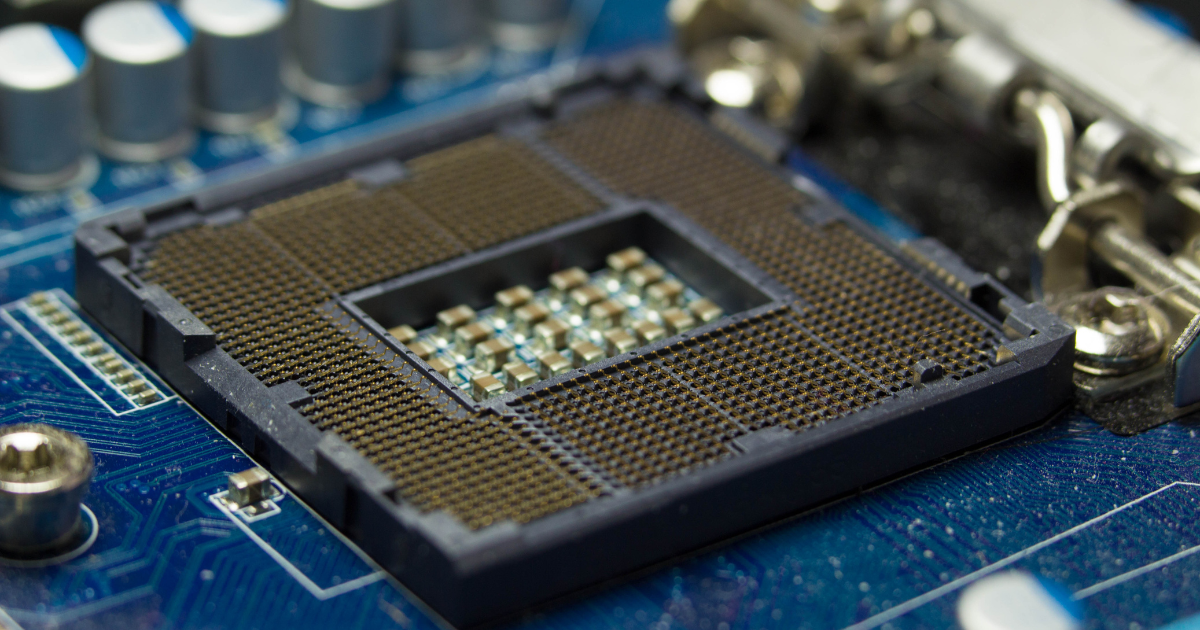

The chipmaker’s flagship AI GPUs, including the H100 and A100, are widely deployed in data centers to power AI and machine learning workloads. These GPUs underpin Nvidia’s dominance of the AI data center market, providing the computational muscle for demanding tasks such as training large language models and running generative AI applications.

In March 2024, Nvidia also announced its next-generation Blackwell GPU architecture for accelerated computing, which the company says will unlock breakthroughs in data processing, engineering simulation, quantum computing, and generative AI.

Led by Nvidia, U.S. tech companies dominate multiple facets of the burgeoning generative AI market, with shares ranging from 70% to over 90% in chips and cloud services. Generative AI has surged in popularity since OpenAI launched ChatGPT in November 2022. Statista projects that the AI market will grow at a CAGR of 28.5%, reaching a market volume of $826.70 billion by 2030.
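To put the Statista projection in perspective, the sketch below backs out the starting market size implied by a 28.5% CAGR and a $826.70 billion endpoint in 2030. The six-year horizon (2024 to 2030) is an assumption, since the article does not state the base year, and the helper names are hypothetical.

```python
# Hypothetical helpers for compound annual growth rate (CAGR) arithmetic.
# Compounding: end = start * (1 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Return the start value that grows to end_value at the given CAGR."""
    return end_value / (1 + cagr) ** years


def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound start_value forward by the given number of years."""
    return start_value * (1 + cagr) ** years


# Assumption: a six-year horizon (2024 to 2030); only the CAGR and the
# 2030 endpoint come from the article.
start = implied_start_value(826.70, 0.285, 6)
print(f"Implied starting market size: ${start:.1f} billion")
```

Under that assumed horizon, the implied base is roughly $184 billion. A different base year changes the figure substantially, so the horizon matters when comparing market-size projections.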

However, Nvidia’s dominance is under threat as major tech companies such as Microsoft Corporation (MSFT), Meta Platforms, Inc. (META), Amazon.com, Inc. (AMZN), and Alphabet Inc. (GOOGL) develop their own in-house AI chips. This strategic shift could weaken Nvidia’s grip on the AI GPU market and significantly affect the company’s revenue and market share.

Let’s analyze how these in-house AI chips could reduce Big Tech’s reliance on Nvidia’s GPUs, examine the broader implications for NVDA, and consider how investors should respond.

The Rise of In-house AI Chips From Major Tech Companies

Microsoft Azure Maia 100

Microsoft Corporation’s (MSFT) Azure Maia 100 is designed to optimize AI workloads, such as large language model training and inference, across the company’s vast cloud infrastructure. Microsoft built the chip in-house and paired it with a comprehensive overhaul of its entire cloud server stack to improve performance, power efficiency, and cost-effectiveness.

Microsoft’s Maia 100 AI accelerator will handle some of the company’s largest AI workloads on Azure, including those tied to its multibillion-dollar partnership with OpenAI, under which Microsoft powers all of OpenAI’s workloads. The software giant worked closely with OpenAI during Maia’s design and testing phases.

“Since first partnering with Microsoft, we’ve collaborated to co-design Azure’s AI infrastructure at every layer for our models and unprecedented training needs,” stated Sam Altman, CEO of OpenAI. “Azure’s end-to-end AI architecture, now optimized down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers.”

By developing its own custom AI chip, Microsoft aims to boost performance while cutting the costs of buying from third-party GPU suppliers like Nvidia. The move should give Microsoft greater control over its AI capabilities and could reduce its reliance on Nvidia’s GPUs.

Alphabet Trillium

In May 2024, Google parent Alphabet Inc. (GOOGL) unveiled Trillium, the newest chip in its AI data center chip family, which it says is nearly five times as fast as the previous version. Trillium is expected to deliver powerful, efficient AI processing tailored specifically to Google’s needs.

Alphabet’s effort to build custom chips for AI data centers offers a notable alternative to Nvidia’s market-leading processors. Combined with software tightly integrated with Google’s tensor processing units (TPUs), these custom chips could help the company capture substantial market share.

The sixth-generation Trillium TPU will deliver 4.7 times the peak compute performance per chip of the TPU v5e and is designed to power the models that generate text and other media. It is also 67% more energy efficient than the v5e.

The company plans to make this new chip available to its cloud customers in “late 2024.”

Amazon Trainium2

Amazon.com, Inc.’s (AMZN) Trainium2 represents a significant step in its strategy to own more of its AI stack. AWS, Amazon’s cloud computing arm, is a major customer of Nvidia’s GPUs. With Trainium2, however, Amazon can strengthen its machine learning capabilities in-house and offer customers a competitive alternative to Nvidia-powered solutions.

AWS says Trainium2 will power the highest-performance compute on its platform, enabling faster training of foundation models at lower cost and with greater energy efficiency. Customers using AWS-designed chips include Anthropic, Databricks, Datadog, Epic, Honeycomb, and SAP.

Moreover, Trainium2 is engineered to deliver up to 4 times faster training than first-generation Trainium chips and up to 2 times better energy efficiency. It can be deployed in EC2 UltraClusters of up to 100,000 chips, significantly accelerating the training of foundation models and large language models.

Meta Training and Inference Accelerator

Meta Platforms, Inc. (META) is investing heavily in its own AI chips. The Meta Training and Inference Accelerator (MTIA) is a family of custom chips designed for Meta’s AI workloads. The latest version, unveiled in April 2024, delivers significant performance improvements over MTIA v1 and helps power the company’s ranking and recommendation ads models.

MTIA is part of Meta’s expanding investment in AI infrastructure and is designed to work alongside the company’s existing and future systems to deliver new and improved experiences across its products and services. It is expected to complement Nvidia’s GPUs while reducing Meta’s reliance on external suppliers.

Bottom Line

The development of in-house AI chips by major tech companies, including Microsoft, Meta, Amazon, and Alphabet, represents a significant shift in the AI-GPU landscape. This move is poised to reduce these companies’ reliance on Nvidia’s GPUs, potentially affecting the chipmaker’s revenue, market share, and pricing power.

Investors should therefore consider diversifying their portfolios by increasing exposure to tech giants such as MSFT, META, AMZN, and GOOGL, which are developing their own AI chips while also benefiting from diversified revenue streams and strong positions in other markets.

Given the risk of reduced revenue and market share, investors should re-evaluate their NVDA holdings. While Nvidia remains a leader in the AI-GPU market, growing competition from Big Tech’s in-house AI chips poses a significant risk. Reducing exposure to Nvidia could be a strategic move in light of these developments.